The Current State of Edge Computing

Throughout the history of computers, data processing workloads have migrated from company premises to outside data centers to cloud repositories and now to “edge” locations.

Why the shift, you might ask? To strengthen the reliability of apps and digital services, reduce costs of running data centers, and lessen the distance information has to travel.

That doesn’t mean people won’t use cloud centers anymore; a lot of data needs to be processed and stored at centralized sources. But the field is certainly shifting toward edge computing.

Current State of the Edge Computing Industry

Technology has swung between centralized and decentralized computing several times in the past. For example, the IBM System/360, introduced in 1964, was a centralized computing unit that performed all operations within a closed system. Compare that to current PCs, and you see that new computers perform more decentralized actions, sending and receiving data processed on countless remote servers.

The cloud is an example of a shift back towards centralized computing. A cloud server is the central computing unit where data from many other machines gets stored or processed. But at the same time, we’re moving towards decentralization with blockchain technology, which eliminates a central authority for processing transactions and instead divides the work among many machines.

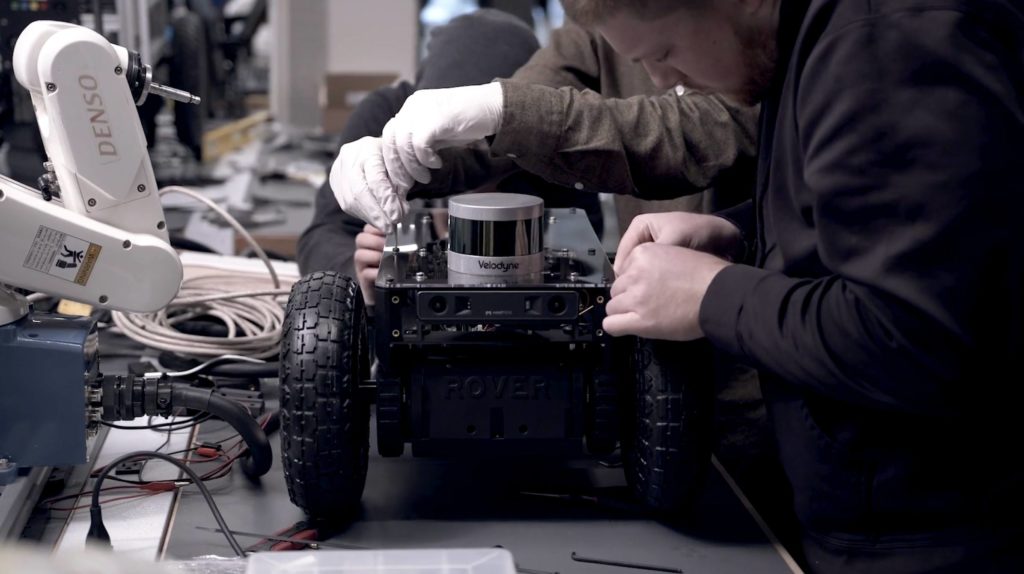

Edge computing marks another shift towards decentralization. Its usefulness is apparent when you look at the emerging IoT industry. Moving the countless processes that an IoT system constantly performs away from a centralized cloud and onto nearby devices eases the strain on central servers, keeping IoT projects fast and agile in spite of their size.

Edge computing is also important to IoT because these devices aren’t always connected to the internet. IoT connectivity solutions are still in their early stages and therefore may not be completely reliable for most at-scale IoT projects. Keeping the computing on or close to the devices themselves, rather than having each device rely on a remote server, means that devices can still perform their functions when they lose their connection.
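To make the offline case concrete, here is a minimal sketch of a device that makes its decision locally and holds on to its readings until a connection is available. The `EdgeSensor` class, its threshold, and the `upload` callback are hypothetical names chosen for illustration; they do not come from any particular IoT platform.

```python
from collections import deque


class EdgeSensor:
    """Hypothetical device that acts locally and syncs when a link is available."""

    def __init__(self, threshold, max_buffered=1000):
        self.threshold = threshold
        # Bounded buffer: the oldest readings are dropped if an outage lasts too long.
        self.pending = deque(maxlen=max_buffered)

    def handle_reading(self, value):
        # The decision is made on the device, so it works with or without a connection.
        alarm = value > self.threshold
        self.pending.append({"value": value, "alarm": alarm})
        return alarm

    def sync(self, upload, online):
        """Send buffered readings through `upload` if online; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self.pending:
            # Remove a reading only after the upload call returns without raising.
            upload(self.pending[0])
            self.pending.popleft()
            sent += 1
        return sent
```

A call such as `EdgeSensor(threshold=75.0).handle_reading(80.2)` raises the alarm on the device itself, and `sync(upload, online=False)` simply leaves the reading in the buffer for later. A real deployment would also need persistence across restarts and retry logic, which this sketch leaves out.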

Companies like Microsoft, Amazon, Google, Dell, IBM, and Cisco are all working on edge computing development.

Amazon’s Three Laws of IoT

Amazon, one of the leaders in edge computing, laid out three basic laws that it uses to guide its edge computing and IoT development.

- The Law of Physics. The first law of IoT is that physical limitations should be considered during IoT project development, and not just the technological or digital limits. Consider an autonomous vehicle: there’s a real, physical need to process data locally on the vehicle, because a round trip to a distant server takes time the vehicle may not have. Even though the data can be sent to a cloud, the Law of Physics dictates that local processing is the correct answer.

- The Law of Economics. The second law takes financial feasibility into account. For most companies—if not all—IoT systems are an extreme financial undertaking. One of the most significant costs is keeping everything connected through a service provider. The less data devices send over a network, the more financially viable an IoT project will be.

- The Law of the Land. Lastly, the third law refers to the regional laws that an IoT project must abide by. This could be literal laws—GDPR, for example—or simply limitations of a region’s infrastructure.
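The Law of Economics lends itself to a quick illustration. One common way to send less data is to summarize readings on the device and transmit only the summary. The sketch below assumes a device that collects readings in fixed-size windows; the function names and the window size are hypothetical, and real savings depend on how a provider actually bills for traffic.

```python
import math
from statistics import mean


def summarize(readings):
    """Collapse a window of raw readings into one small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def messages_saved(raw_count, window_size):
    """Messages avoided by sending one summary per window instead of every reading."""
    summaries = math.ceil(raw_count / window_size)
    return raw_count - summaries
```

For a sensor that takes one reading per second, `messages_saved(3600, 60)` shows that summarizing each minute replaces 3,600 hourly messages with 60. The trade-off is that the raw readings never reach the cloud, so this only suits data where the summary is enough.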

Considering these laws while looking at IoT and edge computing possibilities will help keep us grounded and realistic about the world of edge computing.

Key Stats for the Future of Edge Computing

- According to Gartner, the number of IoT devices will increase from 30 billion to 50 billion by 2020, concurrently increasing the demand for edge computing.

- Between 2017 and 2025, the global market for edge computing analytics is expected to have a compound annual growth rate (CAGR) of 27.6%.

- Hybrid Cloud Solutions predicts that 5.6 billion IoT devices will rely on edge computing for data collection and processing in 2020.

- 70% of CEOs are aware of edge computing and have edge computing initiatives within their organization.

- The top three industries looking to adopt edge computing are smart cities, manufacturing, and transportation.
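To put the CAGR figure above in perspective, it helps to see what a compound rate implies over the whole period. The short sketch below assumes eight annual compounding periods between 2017 and 2025; the function names are illustrative, and the forecast itself does not state a starting market size, so only the growth multiple is computed.

```python
def growth_multiple(cagr, years):
    """Factor by which a value grows after compounding at `cagr` for `years` years."""
    return (1 + cagr) ** years


def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    return (end_value / start_value) ** (1 / years) - 1
```

Under that assumption, `growth_multiple(0.276, 2025 - 2017)` comes out at roughly seven, meaning a 27.6% CAGR would leave the market about seven times larger at the end of the period than at the start.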

Wrap Up

Edge computing is the latest periodic shift in focus in the world of data processing. For now, we’re seeing a mix of centralized and decentralized data processing supporting billions of IoT devices. With more data being produced in more locations, issues surrounding security, privacy, and infrastructure will become an even higher priority than they already are.