Podcast

The Future of Generative AI

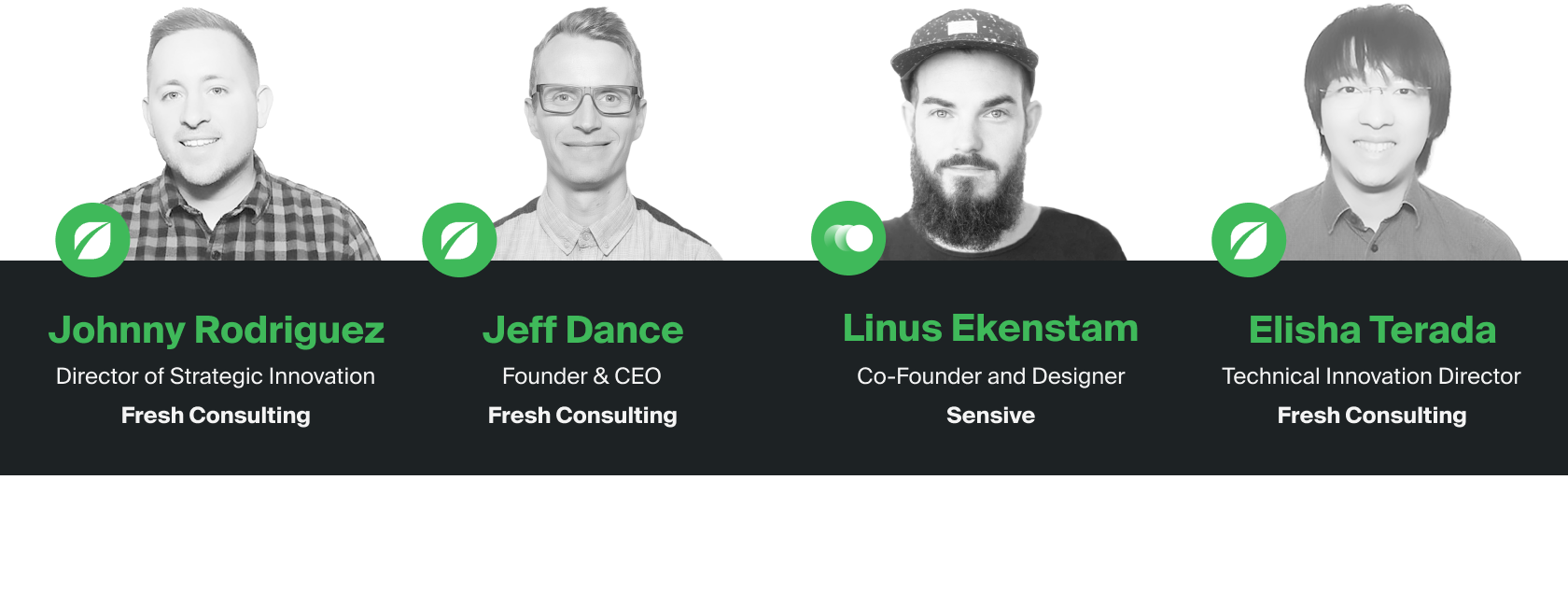

Johnny Rodriguez, Director of Strategic Innovation at Fresh Consulting, Elisha Terada, Technical Innovation Director at Fresh Consulting, and Linus Ekenstam, Co-founder and Designer of Sensive, join Jeff Dance to discuss the future of generative AI. They explore how generative AI differs from other types of AI, some of its most promising applications today, and how generative AI will look in ten to twenty years.

Linus Ekenstam – 00:00:03: The impact of these technologies will be so big that it’s really hard to comprehend with what we know today, how it would change the world that we live in. And it’s up to us.

Elisha Terada – 00:00:13: Even though it might replace some of your work, it might replace it in a good way. Maybe having an open-minded attitude of, what else can I do with the time I now have available, might be one approach.

Johnny Rodriguez – 00:00:25: People become less scared when they’re able to understand it better as well. I think our minds fill in the gap sometimes.

Jeff Dance – 00:00:34: Welcome to The Future Of, a podcast by Fresh Consulting, where we discuss and learn about the future of different industries, markets, and technology verticals. Together, we’ll chat with leaders and experts in the field and discuss how we can shape the future human experience. I’m your host, Jeff Dance. In this episode of The Future Of, we’re joined by Linus Ekenstam, Co-founder at Sensive and Bedtime Stories. We’re also joined by Johnny Rodriguez and Elisha Terada. Both are Directors at Fresh Consulting, leading our Labs division. They’re also Co-founders of Brancher.ai. We’re here to explore the future of generative AI. It’s a pleasure to have you guys with us on this episode, focused on such an important and trending topic right now.

Johnny Rodriguez – 00:01:26: Yeah, thanks for having us.

Linus Ekenstam – 00:01:27: Thank you.

Elisha Terada – 00:01:27: Thank you for having us.

Jeff Dance – 00:01:29: Let’s start with some introductions. Linus, if we can start with you. Can you tell the listeners a little bit more about your background and experience?

Linus Ekenstam – 00:01:35: Yeah, perfect. So my name is Linus Ekenstam, and I am a designer by trade. I’ve got about 15, almost 18 years of product design under my belt, and for the past half year or so, six months, I’ve been putting my feet, my knees, my ankles, my entire body into generative AI and large language models. I’m breathing, sleeping, dreaming AI at the moment. I’ve managed to share my learnings online with an audience on Twitter and in a newsletter. So it’s been really fun to grow alongside others trying to get their feet wet. So I guess I call myself an educator.

Jeff Dance – 00:02:11: Love it. We’ve been learning from you too, and I think we noticed that you have like 82,000 followers on Twitter, and your newsletter now has as many as 12,000 subscribers. So I think a lot of people are listening and we’re grateful to have you with us today.

Linus Ekenstam – 00:02:27: So humbling, thank you so much.

Jeff Dance – 00:02:29: Thank you. Johnny, can you tell the listeners a little bit more about yourself?

Johnny Rodriguez – 00:02:33: Sure, yeah, happy to. My name is Johnny Rodriguez, Director of Strategic Innovation here at Fresh. I’m coming up on ten years at Fresh Consulting, and I would say in my career I’ve had a lot of focus on emerging technology throughout the years. I’ve spent a lot of time with augmented and virtual reality, and a lot in this AI/ML space. Lots of interesting trends have happened, I think, since I got into my professional career. I am also a designer and a developer by trade, so I’ve done a lot in the UI/UX space, as well as overseeing the development of quite a lot of projects and products during my time here at Fresh. And yeah, my connection to generative AI, I would say, probably started before I helped co-found and create Brancher.ai with Elisha. But I think that was where we really dove deep and really tried to apply this goal that we’ve had for a really long time of democratizing emerging technology. And so we created this product, and we’ve had to keep our finger on the pulse of everything happening in the generative AI space, which is fascinating, fast-growing, and fast-changing. So that’s a little bit about me.

Jeff Dance – 00:03:49: Thanks, Johnny. You’ve launched over 20 products, apps, and websites at Fresh, so your experience is deep, and having someone on the front lines at a technology innovation company that’s keeping us fresh has been awesome. Your depth in generative AI and also in the XR space seems unparalleled. So thanks for being here with us. Elisha, over to you, bud.

Elisha Terada – 00:04:14: Hey, my name is Elisha Terada. I’m Director of Technical Innovation at Fresh Consulting. At this point, I’ve been with Fresh for probably about twelve years, a little bit longer than Johnny. And just like Johnny, I’ve always been interested in exploring fresh new technology, as we call ourselves Fresh Consulting, and I always try to find a use for something new and exciting like generative AI as part of our consulting and client services. And I think Brancher.ai, which I created with Johnny over a hackathon, was a very special moment for us, because we wanted something that we could create ourselves ahead of clients asking us. That was back in November, and now we’re getting a surge of requests coming in from clients asking for something in the generative AI space. So we always like to be a little bit ahead of the curve. Sometimes it’s so early that we don’t get much attention until a little bit later, but we always enjoy that journey of how do we stay fresh, how do we embrace the emerging technologies in a positive direction.

Jeff Dance – 00:05:25: Elisha, you mentioned Brancher.ai. Can you tell the listeners just a little bit about that, since that’s a product that you and Johnny co-founded?

Elisha Terada – 00:05:34: Yeah, essentially I saw Johnny building this application stitched together with Typeform and Zapier, and I think it was Airtable or a Google spreadsheet, where, using GPT, you could create social media posts for our marketing team here at Fresh. I saw that and said, hey, Johnny, I think we can create an application that does all of it, where users don’t even have to stitch those solutions together. And there happened to be a hackathon, so we just decided to take a chance. We built Brancher.ai, where a no-coder, someone who doesn’t know how to code, with just a little bit of knowledge of prompting can go and build an application based on AI. And the biggest difference that we have is that you can chain AI models together instead of using just one. And Johnny, do you have anything you want to add to that?

Johnny Rodriguez – 00:06:30: Maybe what I’ll add about Brancher.ai is that I think we intended to help people like us, in a similar, hey, let’s have this be a product that helps solve some of the challenges and nuances that we deal with. But we’ve ended up finding out, now with 40,000 users in just a couple of months, that there are so many use-cases being leveraged that we just didn’t even fathom, didn’t even think about. And a lot of people are finding value with just generative AI to accomplish a lot of the work that they take on or the things that they do in their personal life.

Jeff Dance – 00:07:02: Thanks for the background, everyone. It’s great to have you with us and your depth of experience in generative AI. We’ve heard from a lot of people that this is the year of AI, specifically the year of generative AI. And we still feel like this is really the early days. The acceleration is just picking up so much. It seems much faster than the dot-com era, if we go back in time, and faster than the voice AI acceleration that we saw, where we now have billions of users. It was a matter of years once phones picked up that technology. But it’s also one of the fastest-growing areas for capital investment, growing at a breakneck pace. And we’ve seen big companies like Microsoft, Google, Amazon, and others, a lot of the big tech players, trying to get on this bandwagon. Given what’s happening in the space, what seems different this time around is that the output from generative AI seems to be a lot more human. And so I think for a long time we’ve known AI is going to be big but didn’t realize how close it was going to be to us. And so there definitely seems to be a shift now in this space. So let’s start with just the 101, the current state, and then let’s move into the future. Elisha, for those who may be newer to this topic, can you help them understand what generative AI is and how it differs from other types of AI?

Elisha Terada – 00:08:25: Generative AI is a little bit different. Although it has existed for some time, it’s getting more attention now because it generates new content. It can generate text and images, and it’s now getting into video and audio. So instead of just predicting what something is or classifying what the data is, it can generate new data. And because it is getting so good at generating data that we humans can’t even tell the difference between human output and computer output, it’s getting a lot of hype today.

Jeff Dance – 00:08:59: It seems like we’ve hit a pace of change where not only are billions of people now following this, but it just seems to be growing so fast. Linus, what are your thoughts on that? Why now? Why is generative AI such a big thing right now? You mentioned yourself you’re trying to keep up with it, you’re living and breathing it. But why now?

Linus Ekenstam – 00:09:19: Yeah, it’s difficult even for someone like me. If we think a little bit about it, it’s not an overnight success. The research for this stuff has been in the making for the past decade. There have been a lot of strategically positioned dominoes, if you were to put it that way. I think one reason for the current success and the current hype cycle, or the beginning of this taking over the world, has a bit to do with UX. If we look at GPT-3, it has been around for quite some time, but it never really took off. It’s been in the OpenAI Playground for almost two years. But then when they released the chat interface, ChatGPT, and also a somewhat updated model that could keep a train of thought in a conversation, things started to get spooky and it really took off. And if you look at image generation and the different models or solutions that are out there for it, it’s accessible. It’s not bound to, let’s say, particular computer hardware. You can have your smartphone and you can go into Midjourney on Discord and start creating art. So the barrier to entry on a lot of these things is almost non-existent. Whereas with other tech breakthroughs, just look at XR, like VR for example, it’s a massive, massive hurdle to get to adoption because you need to buy new hardware. Here, it’s just like a website. And these tools run on any type of low-end device with access to the internet. So it’s democratized, and distribution is already there. We have 5 billion people using the internet, so it’s nothing like the dot-com era, right? 1999 looks like nothing compared to this, because back then almost no one used the internet, and now everyone is on the internet. So I think those are the contributing factors to why this is taking off so fast, and it also solves real problems.
I’ve never seen a technology get introduced into the marketplace and adopted so fast, creating so much value and changing so many workflows seemingly overnight. So I think all of that coming together is what’s creating this flood of AI, generative AI, in all facets of the workforce, essentially.

Jeff Dance – 00:11:29: Are we getting drowned by that flood, or are we surfing the wave, essentially? I think that’s what we’re all trying to figure out, right?

Linus Ekenstam – 00:11:37: Yeah, if you’re in the thick of it, yes, it might feel like you’re getting flooded, but if you’re looking at it from a regular internet user’s perspective, they don’t get flooded because they only see the biggest stuff that’s happening. They’re seeing the Bings, they’re seeing Google Workspace, they’re seeing ChatGPT. But if you’re in this space and you see what’s going on under the hood, yeah, that’s a lot. And when this stuff hits the marketplace and the winners get siphoned out from whoever is not going to be around, it’s going to get even crazier, because then everyday people will have access to these tools too. So I think we’ve seen nothing yet. We’re at 1%, perhaps.

Jeff Dance – 00:12:12: Thank you for that perspective. It definitely is a race, that’s for sure. And we see this with the tech companies and even people are predicting massive changes to search and Google is saying, hey, we got to catch up, we got to launch something now even though it’s not ready. Johnny, you track technology pretty well. From your perspective, who are the big players in generative AI right now and kind of what’s going on in the marketplace?

Johnny Rodriguez – 00:12:35: Wow, that’s a big question. Yeah, there are definitely some big players in the space today, and then there are new players entering the space, I would say almost daily. Definitely weekly. I mean, I think by the time we actually launch this podcast, a portion of what we say today will already have changed. It’s moving so quickly. We’re even doing an emerging tech meetup here in Austin, Texas, and a lot of what we shared in our last meeting will have to be updated. We’ll have to say, oh, by the way, they’ve now launched X, Y, and Z, and they’ve launched this iteration of this. To answer your question, Jeff, I think OpenAI is one of the bigger, most well-known companies in the space. And other big tech companies like Microsoft and Google are implementing either their own versions or they’ve accepted the technology and have started to build with it themselves. But I think with every major technology company, you’re going to see elements of generative AI, not just in, hey, here’s a fun way to go generate an image. I think a lot of times we think that’s what it might be, but it’s going to be fully integrated into the systems that you use. So yeah, OpenAI has quite a few different platforms under its umbrella that are in the generative AI space. The one that caught my attention at the very beginning was DALL-E 2, which was: type in some text, generate an image. And I was floored. I was literally obsessed for 18 hours straight, and I generated thousands of images my first night with access to DALL-E 2. And then I had access to GPT-3. But it wasn’t until, like Linus mentioned, the application of chat that it clicked for me: oh, wow, I can have conversations with this to get to the output that I want. I don’t have to think about a blank page and what do I ask it. I can go through iterations to get to that.
So there’s a lot that OpenAI has been able to enable. But yeah, today I would say from the big companies we’re going to see it from Microsoft and Google, and Adobe has recently shared some things. I think we’re going to see it all over, and I think that in a lot of cases you will hear companies not leading with it. It’ll just become part of the interface and part of the natural experience that a user has. And it might be more frictionless, where you don’t have to think about it, in the same way that today you take photos with Google and there’s a lot of AI that’s leveraged, not generative AI, but lots of AI, to improve the image output. And you don’t think AI when you do that. Or when you’re on your TikTok For You page, you’re not thinking, okay, these are images that are going through algorithms and data to give me that. So I think you’re going to see lots and lots of companies start to put it more in the background. Right now they’re very much leading with it. You’re seeing a lot of features where, I think the most common icon right now is a magic wand, which represents using AI to generate something new. And you’re seeing that in almost every application. Notion and Adobe are doing it, and we see Google using it. So quite a lot of companies are leveraging that, but it’s going to be everywhere, all over the digital footprint of these major applications.

Linus Ekenstam – 00:15:51: And AI also managed to get like the color purple for some reason.

Jeff Dance – 00:15:54: Yeah, I want to dig into that a little further. I might have to research why, but Linus and Elisha, other thoughts on the marketplace, like what’s going on in the marketplace and kind of the big players in the space.

Linus Ekenstam – 00:16:04: I think what’s interesting now is that OpenAI leading the way, with Microsoft obviously in bed with them, has gotten everyone scrambling to try to catch up, and I think we’re busy looking at what’s happening without looking further out. It’s safe to say that maybe two or three years from now, most of our devices will have these things running at the edge. So we’ll basically have stuff running on our devices. So with all these services that are currently out there creating buzz, I’m not so sure that what’s happening in the marketplace right now is interesting in the long run. I think that’s my take. I’m excited about what’s going on, and I’m excited about companies doing good and trying to help get this out to more people, because obviously that’s what’s needed. But I think it’s interesting to see what’s going to happen in the relatively short term in terms of edge computing. That’s where my head is at on this question.

Elisha Terada – 00:16:58: Yeah, from a software engineering perspective, because I create applications like Brancher.ai, I think we can also look at the marketplace from the engineering side of things. For example, Midjourney. Everyone loves Midjourney. I love Midjourney. I’ve used it to generate images. But it is a very closed system. You cannot tap into the power of Midjourney as a software engineer. So some users on Brancher.ai would ask us, hey, when are you supporting Midjourney? And we have to say, well, they don’t support external developers, so we can’t support Midjourney as an official thing. And that is very interesting, whereas OpenAI provides DALL-E with an API, so of course we would integrate with that. So there is an open, integrable market that we as engineers can tap into to build more interesting, accessible things. There are also more proprietary things that they may never release. There are also open-source alternatives that people come up with to try to compete, or maybe not to compete but to make the technology more available, as in the algorithm or the technology itself. Now, you may not be able to run it, because it still costs so much money to rent GPUs to run it yourself. But there is a side of the marketplace that is very interesting to monitor: how are they enabling other creators or engineers to build their solutions? Because that also changes the landscape of what to expect in the future.

Jeff Dance – 00:18:34: It definitely seems like the creators and the tech companies are grabbing hold of this new power, and they’re each harnessing it in their own way. And that’s the building block of all these other use-cases that are to come. It’s raw and powerful, but it still seems to be the wild west for a lot of business applications or workflows. Everyone’s on their own, doing their own thing. And so I think there’s a universal benefit to that. It also seems like search engines over the last ten years have gotten more and more ad-centric, and maybe the content a little bit more SEO-manipulated. And it seems like answers that are at least perceived to be more human and friendly are coming out of generative AI for questions that you would otherwise ask a search engine, where the results you’re getting are maybe the ads and some of the SEO-targeted results. So I’m wondering if that’s also playing into the pent-up demand for what maybe these search engines should have been giving us in the past, if they had been evolving in a human-friendly way. Johnny, from your perspective, Stanford has said right on their website that generative AI has this capacity to change how we think, create, teach, and also learn, and maybe even change our perspective on what’s important to learn. But tell us more about use-cases. What are people doing today with generative AI that’s making it such a big hit? I talked to one of my colleagues, and his wife just stumbled on generative AI. And like you, she was up all night playing with it, right? But tell us more about the use-cases with generative AI. Specifically, you guys have an application, Brancher.ai, where you get to see a lot of these use-cases.
But tell us more about the categories of use-cases, maybe some common categories, to help people understand this better.

Johnny Rodriguez – 00:20:34: There are definitely a lot of different use-cases out there that we’re seeing across a wide variety of industries. And I think generative AI is helping in a lot of industries. It’s helping them go from zero to one faster, making tasks easier, or making it easier to get started with something. When you partner with the technology to have it help you get to something a little faster, your mind and your processing and cognition can be focused on things that might be more creative or that build upon things. It’s almost like you’re doing the work of one and a half people or two people, in a way. So you see that in education. I mean, I can go across each of these. Automotive: there’s a lot happening in that space. I know there’s data around CarMax and how they’ve leveraged OpenAI’s technology to summarize thousands of customer reviews and help shoppers decide what used cars to buy. And they’re able to do that pretty quickly. Or entertainment, right? I’ve seen this used by Pixar designers and people who create films and stories and scripts. They’re using it to generate new characters or variations of stories or game levels in the entertainment industry. Retail: you mentioned SEO earlier, and there’s the idea of personalizing product recommendations, product descriptions, or marketing copy to promote different things. And we could go on and on with finance and healthcare and so on. But yeah, I think it definitely taps into a lot of different markets and industries today. I think not all of these industries are at the forefront of these emerging technologies, though. And so there’s still a big gap to mass user adoption across all of these different industries, but we’re starting to see it. I think we’re definitely at the precipice of all that.

Jeff Dance – 00:22:23: Got it. Linus, what are your thoughts on kind of some of the common use-cases that we’re seeing today?

Linus Ekenstam – 00:22:29: Yeah, common use-cases. I think whether we look at text-based or image-based or video-based, this is everything, right? And I think Johnny touched on a lot of the areas where it’s making the rounds. But one of the biggest aha moments for me with LLMs, for example, was when it actually completed a task that I had in front of me, that I needed help with, within my workflow, that was unique to me. And I think this is one of the power uses. Software today is built for the masses. Where there’s a TAM that’s big enough, some company or individuals go out and build software for that. With large language models and generative AI, I think even the minutest problem that maybe just one or two people might have is something that you can solve with these tools. So in broad strokes, yes, we’re seeing a lot of really, really cool use-cases where this big company is doing X or that big company is doing Y, and a lot of grunt work that was really hard to do before, we can now do with indexing. And it’s interesting, but I think where the real impact will be is, there are 100 people in the world with this unique issue, and these people can use these tools to get their issue solved, because no one will ever build software for them. To be frank, no one will ever go out and do that. But then if you look at those 100 people, there are going to be tens of thousands of those instances where there is a small group of people who have a unique issue. So that’s where I think we are seeing leverage, and I think that’s where we’re going to see more leverage. And I think what you built with Brancher, for example, is a good example of that. You’re empowering people to build their own little micro tool or their own solution to a problem. So yeah, I think that’s what I’ve got to add to that.
And I wrote something the other day about how disposable apps are going to be a thing of the future, right? Where an app is built by AI purposefully for the single task you have at hand that needs solving. The app will run in some runtime, and then once you’re done with your task, the app will delete itself and go away. It won’t be in the runtime anymore. And I think this might sound like science fiction right now, but I think very soon this will be just normal. The AI makes you something on the fly, it uses that to solve a problem, and then it goes away.

Jeff Dance – 00:24:53: Easy peasy. At one point in my career I was writing a book on the different types of smart. You can be creative smart, you can be math smart, you can be street smart; there are lots of different types of smart. And one of the core questions from the outline and the research I was doing was, what makes someone smart? Because just being test smart didn’t make you successful in business, as an example. But being business smart also didn’t mean you could go solve problems for NASA. So there are all these different types of smart I was thinking about, and I remember coming across a definition that said it was the information you surround yourself with as you do a task that makes you smart. And as we think about generative AI and these throwaway applications, if we can constantly give a thinking partner our unstructured ideas or notes or drawings or images, and it can help us think, it can produce something beautiful or produce the next iteration of something or help synthesize a whole bunch of research around that task, then there’s something really powerful about that intelligent system that we’re pairing with. And if I can summarize what you guys have said, it’s almost like, hey, what use cases? There’s an unlimited number of use cases, right? It’s really almost anywhere. It’s more like, hey, there’s an intelligent partner that can augment almost any task and work that we do. Elisha, what are your thoughts on this?

Elisha Terada – 00:26:22: Yeah, I agree, and I think how general the problems are that these generative AIs can solve today is also great. Since around 2017, when big companies started to release cloud-based AI model training and all that stuff, I would collect images of cats to try to predict the breed of a cat. I would also take pictures of sticky notes to try to identify them with object detection. It’s going to be a world where you don’t train things on your own unless it really has to be a super niche thing. The generative AIs are generic enough that you can start using them today to generate images and write text, and that’s why they’re so applicable to so much problem solving today.

Linus Ekenstam – 00:27:14: I think one thing to add here, for all of these conversations and also going forward in this conversation, is that AI is the worst it will ever be today, right? It will never get worse than it is currently. So that’s just food for thought when you embrace this and talk through this. And we’re already mind-blown, so just sit with that, right? It’s never going to be as bad as it is today.

Jeff Dance – 00:27:39: I think for a long time we talked about devices that are smart, and I think what we meant was that they were connected to the Internet and they could send data or receive data, but they weren’t truly smart. Anything now can be connected to the Internet. A tree or a plant can have a little receiver, anything. But is it smart? And I think we’re now at that place where we understand better what smart is, and what it is, I think, is closer to us, closer to human beings. It’s actually intelligent, where it can synthesize or give some good output that we would quickly understand and be like, wow, I got something valuable. It did something for me that I didn’t have to do a lot of thinking around. It gave me something that I could use right away. I think there’s a sea change there, where it’s not necessarily another mind to make decisions for you, but it’s this intelligent system where you can use your mind even better.

Linus Ekenstam – 00:28:31: Yeah, I think one way to look at this is like, we’ve been in the information age for quite some time. The Internet is basically a large library, and we’re kind of like, surfing the library. We’re querying the library. We’re, like, skilled librarians, and we’re also skilled curators because we need to filter out all the crap and the ads and the spam and the viruses, and along comes generative AI or Large Language Models, which is like some type of intelligence. We don’t know really what type of intelligence it is, but we’re moving away from an information highway to an intelligence highway where we’re just, like, we have access to this synthesized entity that is able to, in natural language, speak back to us. And I think that’s the major shift here, that we’re going away from the library of the Internet to kind of like the new beginning of entities, where information is the core, but intelligence, it’s what’s getting delivered. And I think this is the mind-blowing stuff that’s just like, this is straight out of Sci-fi, and we’re living through it. This is what’s so powerful, with this shift.

Jeff Dance – 00:29:38: In the last six months, we’re now in the Sci-fi world. No, I feel it. I feel it. I think we all feel it. And there’s an information hierarchy, or a data hierarchy, that goes: data, information, knowledge, wisdom. The DIKW framework. And I think what we’ve entered into is that the intelligent systems we’re feeding information to are now giving us more of the knowledge and wisdom side. We’ve been experiencing the data and information, but we’re getting into a better use of information that precedes maybe better knowledge and better wisdom. What about limitations? Johnny or Elisha, do you have any thoughts on the limitations of generative AI? We’re seeing the use cases proliferate. Everyone’s talking about it, from my young kids at school, who are either getting penalized or rewarded for using it, to the workplace, where people are thinking about using it. What about the limitations?

Elisha Terada – 00:30:34: Yeah, I’ll speak from the resource limitation perspective and not the usage limitations, which maybe Johnny can talk about. Resource-wise, it’s still very expensive. You still have to lease those GPUs. For example, from Google, you can lease those GPUs. I think if you were to buy one, it might be like $20,000 plus. So you’d still be paying. Last time I checked, if we were to run our own models, I think it was a couple of month minimum to have it up and running. And that’s only one GPU that can take one request at a time. So if we have a hundred users requesting to run our own model, then we need to think about how we load-balance or queue things up. And if we don’t want to do that, we need to keep leasing more and more of these. With the amount of funding that OpenAI has received, they can easily do that and try to offset the cost, since Microsoft can fund them, for example. And Microsoft can generate income by selling ads in a new way that hasn’t been done before to try to compete with Google. But at the individual level, like me, I’m running those cool new diffusion models on my PC with an NVIDIA graphics card. So that’s cool, but if I were to turn it into a service that everyone can use, it’s still difficult. So you see a lot of forums where engineers or creators share, hey, I created this cool model. What you do is download the model to your computer and use it for your personal fun, but it’s still not at the level where everyone can deploy these things. So there’s a lot of creative stuff out there, and a lot of companies try to support that effort, but a lot of cool stuff is still hidden behind the gate of cost and availability of resources.

Jeff Dance – 00:32:39: Thank you for that kind of technical perspective. And I think that helps us understand why it costs money to actually ask for some, get some answers, get some output. And from whether that’s image or text or one thing to another, I think that helps us better understand the perspective on cost, which is unique. It doesn’t cost to do a search engine query, but for a lot of these generative AI models, you’re paying something, right? You have to have an account, you got to pay something.

Elisha Terada – 00:33:08: Yeah, I wanted to add that with Bing you’re limited in how many queries you can run because of all these costs that they’re trying to control. That, or you have to pay for ChatGPT Pro if you want to keep querying despite heavy load, so that you can still get results while the free users are blocked from using ChatGPT.

Jeff Dance – 00:33:32: Johnny, what are your thoughts on sort of just some of the limitations on use in the everyday?

Johnny Rodriguez – 00:33:38: So I would say with generative AI today, there are so many things that it does super well. And I think those are the big aha moments we’ve been having the last few weeks or months. A lot of it has to do with what OpenAI is releasing and then seeing it get included in a lot of the products we use on a day-to-day basis. But there are technical limitations that we’re still experiencing now. A lot of these might change by the time this podcast gets released. Some have already changed in the last two weeks. If you think about it from an image standpoint, and Linus, you could probably confirm a lot of this, think of Midjourney V4 and the fact that it can’t really process hands very well. I generate something really cool and it’s got six fingers or twelve fingers or, like, a mesh of fingers. And unless you look really closely, you don’t see that. But there were little details where somebody would go and generate something and you would say, wow, this is incredible, but it’s not quite there yet. Right? That’s changed a lot in V5, version five of Midjourney, where they’re able to get the fingers right and you’re seeing better results. Teeth actually look normal, fingers look normal. And there are more and more things that you can track almost on a day-to-day basis, definitely on a week-to-week basis, in how image generation is improving. And we’re going to see DALL-E 3 come out. They currently have an experimental version. We’re going to see that get better, but there are limitations on what it can do, definitely. From an LLM standpoint, going away from images a little bit, with something like ChatGPT or GPT-4, a lot of the outputs can sometimes be what’s called hallucinated facts. So basically it’s a reasoning error, right? It’s essentially generating a response that is factually incorrect, or it makes an inference that just is not supported by what you typed in, right? 
And we’ve seen that sometimes happen, where it’s like 99% of the time I’m getting facts, but then sometimes it generates a stat that’s wrong, and at the time it wasn’t referencing things, right? Bing is able to show sources and say, hey, this is where I got the data. Or if you’re on GPT-4 or ChatGPT plugins, which is something newer, there’s even a little bit of data around where it goes and what it clicks and things like that. So definitely the reliability of outputs. Even a few weeks ago I would have said limited knowledge of recent events. So if you go run something and you say, who won the game last night, this NBA game, it won’t know that. It didn’t know that. With ChatGPT or GPT-4 connected to Bing, it now has access to the internet, right? And then ChatGPT has plugins, which is something they recently announced. If you haven’t seen that, that’s another one of those big moments where I was like, oh my goodness, this is crazy. But the idea is that it can actually connect to the Internet now, so it has browsing capabilities, and now it can connect and answer that. So again, these types of things that I’m listing could be addressed with technology or through user experience. We’re also seeing a lot of bias in the outputs today. The training data is a lot of just things around the Internet, right? Depending on where the data came from, it’s a reflection of what we type and what we upload to the Internet. But a lot of it can be biased, and that could be a gender or racial bias, and it’s sometimes reflected in the outputs. Maybe the last thing I’ll mention, just not to go too long on this, is that I think another limitation has to do with the application of generative AI to people’s use-cases. So I know there’s a quote, I wrote it down so I could reference it later, that comes from Bill Gates that I really like. 
And he says, “The first rule of any technology used in a business is that automation applied to an efficient operation will magnify the efficiency. The second is that automation applied to an inefficient operation will magnify the inefficiency.” And so the quote is kind of highlighting the importance of understanding how to use the technology effectively in order to reap its benefits. If you apply GPT-4 or ChatGPT or Midjourney to an efficient operation, it can really magnify the efficiency and bring immense benefits. But the flip side of that is that if you’re doing it for something that’s inefficient, or if you don’t really know how to use it, it could just create more noise and more confusion, and it could really lead to negative consequences. And so I think we’re still discovering ways to improve that today, where to apply it. So the education gap is still there, and I think there’s a lot of work to be done there in the space.

Jeff Dance – 00:38:00: I’d like to talk a little bit more about ethics as we think about limitations, but let’s first talk about the future and where things are going, and then step back to some of the ethics, because that’s on a lot of our minds as we think about how fast this is moving, given that technology typically has a little bit of a life of its own. And this is actually a great example of technology having a life of its own, especially when there’s an arms race to see who can be the leader in the space. But Johnny, you mentioned Bill Gates. Another thing he said was that most people overestimate what technology can achieve in a year, hence Gartner’s Hype Cycle, but they also underestimate what can be achieved in ten years. We’re already impressed. And Linus, you mentioned we’re at like 1%. This is a small baby, so what do we think the future will look like? What will things be like ten years from now? What could change? Linus, what are your thoughts, specifically around the technology or the things that this technology could change?

Linus Ekenstam – 00:39:01: If we just, again, step back a little bit. Historically, if we look 15 years back, basically the iPhone came out, right? And then look at which technologies that you’re using day-to-day today weren’t around 15 years ago. If you just ransack yourself and look at that, you go, like, maybe there’s one or two things that you’re using daily that were around 15 years ago, if even that. The Internet is one and a phone is another one. But it wasn’t smart. The rest of the things that you’re using daily, like the Chrome browser, the camera, whatever you’re up to, your identification on your phone, banking, all this stuff didn’t exist. And we take it for granted. So we’re technologists. We know how this stuff works. Change itself is exponential, which is something that most people have no idea what it means. Even as someone that’s in this field, it’s really hard to comprehend that we’ve been through like 30 cycles of Moore’s Law since the invention of the microchip, or close to, and we’re now kind of entering the second half of the chessboard. So every doubling now, every increase that we’re seeing, is kind of like a doubling of everything up until this point, which is insane. So when we’re looking at AI, it could completely stall. We don’t know. It could go for another year and then it just stops. Innovation stops. I doubt it, to be honest. So looking ten years into the future at this point, it’s almost impossible. I think the changes that we’re going to see are going to be so large in magnitude that if I had the looking glass and went ten years from now, I’d say 50% of all current knowledge workers have either transformed or gotten into a different role. Maybe they’re not lost. Like maybe we don’t lose 50% of the workforce, but they’re definitely going to transform. 
If we look at the convergence of all these technologies, we’re looking at where we are with LLMs, generative AI, with OCR, it’s like object recognition. We look at self-driving from Tesla, we look at Optimus from Tesla, and we haven’t even talked about that. But all of these things are going to hit an inflection point somewhere in the near-term future where everything is going to get enabled at the same time. And this is something that’s really hard for everyday folks to comprehend, what that means, right? We get a self-driving vehicle. That’s a car. It means that pretty much anything can get self-driving. We get a self-driving vehicle. We get a bipedal robot that can do human tasks, that’s going to be able to see and operate in the real world, and that’s just tied together. We have these LLMs that are, like, it’s a transformer. It predicts the next character. It can speak back to us. We don’t really know how far the transformer will get us in terms of the AI technology, that’s your derivative of the LLM. And the next thing after that, we don’t know where that is either. But it’s like we have intelligence on one hand, we have robotics and the evolution of all of that on the other hand, and we have all the research fields that have been under development for the past 10, 15 years that haven’t really gotten into the limelight yet. So we can assume that sometime within the next, I’d say, five to ten years, the convergence of these things will happen. And I’m not saying we will get AGI. That is not what I mean when I say convergence of these technologies, but I think the impact of these technologies will be so big that it’s really hard to comprehend with what we know today, how it would change the world that we live in. And it’s up to us.
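[Editor’s note, not part of the conversation: the “second half of the chessboard” remark above rests on a simple property of doubling, shown here as a short worked identity. The framing as exact doublings is an idealizing assumption; real technology cycles are not this clean.]

$$
2^{n} = 1 + \sum_{k=0}^{n-1} 2^{k}
$$

[In words: after $n$ doublings, the newest step alone is larger than all previous steps combined. For example, $2^{5} = 32$ while $1 + 2 + 4 + 8 + 16 = 31$, so each further doubling adds slightly more than everything that came before it.]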

Jeff Dance – 00:42:29: I want to ask another question, because technology moves so fast and this is no exception. What would we say to people that are scared? Because there are a lot of people who are, as you talked about. People were scared of computers back in the day, and it changed us, but mostly for the better. What would we say to people that are scared of generative AI?

Linus Ekenstam – 00:42:47: Yeah, I’m dealing with this a lot because of my given optimism. So my mission is simple. I see a need for educating people en masse, fast. Because the people that know this software, let’s put it this way, the people that have access to these tools, that have learned to use these tools, they are not running ahead of the people that are not using these tools at like a 1x speed. They are running away from the other cohort at a 10x or a 20x speed. And I think the problem that we’re looking at is, if the time divide between the people that are getting into these tools and the people that are not gets too long or too dragged out, we’re going to see larger societal issues. But if we can get as many people as possible, even though it’s painful, even though it’s scary, onto the train at the same time, and just have continuous trains coming into the station filling up with people, we’re going to win. So I think the approach people should have, if they’re feeling afraid, is to try to take on the lens of a kid in the playground. Don’t look at these systems or tools as adversaries or enemies. They should look at them as friends, as colleagues, as something that could augment their skills, something that is playful. They shouldn’t be afraid of doing wrong. I think basically all the stuff they should have done with a computer early on applies here as well. The problem this time around is, again, speed, breakneck speed. The fact that 5 billion people are on the internet, I couldn’t stress this more. Right. We need to make sure that this is something that benefits all, because if people are left behind this time around, it’s going to be really hard for them to catch up. 
I think we’re seeing at the fringes now that the people getting hurt the most are people that are in university, like starting their education early on, and they’re afraid because here is GPT-4 scoring in the 90th percentile on the Bar exam, and I’m going to be a lawyer. I’ve got five years in front of me before I even get out of school. And then you have the person that’s been 30 or 35 years in the industry, whatever industry it might be, and they have, like, coworkers that are 10, 15 years younger running rings around them because they have access to these new tools and they just spit out content. So we need to be cautiously optimistic and we need to have a playful mindset. I think that’s my perspective on how you should approach this technology to be part of it.

Elisha Terada – 00:45:10: Yeah. Johnny and I are also optimistic, as people pushing for emergent tech, and we run into these questions as well, even within our company. And I think the way I try to encourage people to think is, it is probably going to let you do more of the things that maybe you wanted to do more of. It’s the famous saying with all the SaaS applications telling you, hey, you can focus on what you really love to focus on instead of worrying about the mundane work. Right? Like, we like to use Mint.com or Expensify to categorize our expenses and just use software to file taxes or send them to a tax accountant, instead of having a paper trail of transactions that we have to record and then send through manual papers. Maybe it helps. Even though it might replace some of your work, it might replace it in a good way. Maybe having an open-minded way of asking what else can I do with the available time that I now have might be one approach. Johnny, what do you think?

Johnny Rodriguez – 00:46:18: Yeah, I love that. I think that’s a good point. I think those are all things that have an impact. As we think about the type of work we take on now and how it might, like I said, help us go from zero to one in some of these areas, it becomes really impactful really quickly, and you reduce the iterative cycles that you go through. But where my thinking goes, as it relates to addressing people that are scared of generative AI, they’re like, well, this is rolling fast. We definitely hear that a lot from lots of different people. Even just mentioning the platform that we built with Brancher.ai, I’ve had some very drastic negative responses to participating in that movement. I think it’s important to hear those concerns. So for those that are scared about where it’s going, I think it’s important to lend an ear to the people that have concerns and actively listen, and then also see what we can do to apply it, because a lot of that feedback is actually pretty important, I think. And that might help the way we build our products. It’s part of any product that we build today. Coming from that design background, it’s important to have that user feedback in the process. Other things I’d add: there’s a lot of education that people need to see how it can help them. So just providing very accurate and accessible, relevant information. I think another thing we can do is share the success stories. There’s a lot in the news that focuses on the negative part of it. And I think we could be advocates for sharing success stories, highlighting positive examples of these real-world applications and how it’s helping in healthcare or education, and, if you’re seeing those things happen, sharing that with the world. Maybe a couple more that come to mind. 
I know Linus mentioned one about just that, human-AI collaboration. I think we could really reinforce the idea that AI is meant to complement human skills and expertise rather than replace them, and explain that human-AI collaboration can really lead to effective problem solving and can really increase productivity. And maybe the last one is addressing the ethical concerns, being really transparent and addressing the potential for misuse. Acknowledging that, like any technology, it can be misused. The same thing happened with the Internet in ’95: it could be misused. And here are some steps that can be taken to prevent that malicious use, or to build security measures, or to collaborate with policymakers and involve the public. There’s a lot, I think, that we could start to tackle to help reduce that fear. I think people become less scared when they’re able to understand it better as well. I think our minds fill in the gap sometimes.

Linus Ekenstam – 00:49:03: That’s so important, Johnny. People are less scared when they understand. And I think most people are afraid now because they don’t understand. That’s it. I think that’s the most... yeah, that’s it.

Jeff Dance – 00:49:16: That’s a great plug for this podcast episode. But I think whether we look at the calculator, the computer, or companies like Uber, these things roll forward, and historically people are on the sidelines because they are scared and, to your point, they maybe don’t understand. But it’s going to come. It’s going to come. And so I think this call to play with it, dive in, understand it, test it, seems to be a great takeaway for those that want to learn more. I want to finish with just this last question, and I appreciate all the depth of insight so far. Where would you send folks to learn more? I know there’s an element of testing things, but what would be some things you would say? Now, Linus, I’m assuming your newsletter is one place people can go.

Linus Ekenstam – 00:50:10: Yeah, shameless plug. No, but I do what I can. I’m on Twitter, right? @LinusEkenstam. And I do write my newsletter as well, where education is primarily what I focus on. But I would encourage, if you’re interested, go on YouTube, search, find some videos of people explaining it thoroughly, and slowly go to the tools themselves. Go use the free version of ChatGPT if you haven’t tried it out and just go play with it. I think, like with anything, knowledge seeking is at the forefront. And actually, one of the funniest use-cases for learning with these tools is to go to ChatGPT and ask it to teach you something that you don’t know about, and ask it questions like, what should I focus on if I want to learn X? And you’d be surprised, because it’s like having a conversation with a tutor, for example, and it might be the aha moment that you’re looking for. So actually the best place to go to learn about these things is to go to the tools themselves and ask them, what do I need to know to learn how to use ChatGPT? Meta.

Jeff Dance – 00:51:18: Johnny or Elisha, any other thoughts on favorite places where you guys have been learning? Kind of staying abreast of what’s happening?

Elisha Terada – 00:51:24: I think, echoing what Linus mentioned about using the tool to learn, I’ve witnessed some success of people turning around their understanding of the tool just by actually using it. Even the knowledge workers who are afraid that it might replace them, including the artists. All of the people that I personally know, after they use a tool, they learn quickly that this can be used to their advantage. Like, I have an artist friend who now uses generative AI to quickly come up with concept art instead of spending hours drawing something and sending it to the boss, hey, what do you think about this? And the boss says, oh, I don’t like it. Come back the next day after spending eight more hours. Well, instead they can just generate hundreds of images, show them to their boss, and it’s like, oh, yeah, we like this one. Okay, now we are past the uncertain, ambiguous “What is my boss going to like?” It’s like, okay, yeah, we’re going with this concept now. Let’s put the 100% custom effort into it. So I think people will find really quickly that once you use a tool, you’ll find ways to magnify your work and do more of what you really want to do. Johnny?

Johnny Rodriguez – 00:52:41: Yeah. I really like both of your suggestions. I don’t know how much I can add to those, but I would say, for me, the way I try to educate myself is I like to subscribe to a lot of newsletters, like Linus’s or others. When we participated in the hackathon, it was with Ben’s Bites, and there are a few other big, fast-growing newsletters, and their full intent is to educate or to just let you know what’s coming out. So instead of me going and digging through Reddit and through Twitter and through a lot of these, they’ll bring you a curated list of apps that might be powered by AI, or new advancements. And I’ll just keep myself up to date by doing that. So I think a combination of doing your own research, following people that are doing the work to help out with the education, like Linus and others, and then newsletters would be the three recommendations I’d give.

Jeff Dance – 00:53:26: Thanks for the depth of insight. Let me know when you guys each clone yourselves into your own LLM so I can chat more often and get more help. But I’m looking forward to the future, and I appreciated your perspectives on how we shape it and how we can make the most of our experiences as humans living in it, in a really fast-paced time right now that everyone really needs to understand because it’s going to impact their future. But I appreciate you guys being on today and look forward to continuing to learn from you.

Elisha Terada – 00:53:57: Thank you so much.

Johnny Rodriguez – 00:53:58: Yeah, I appreciate it.

Linus Ekenstam – 00:53:59: Thank you so much for having me.

Jeff Dance – 00:54:02: The Future Of podcast is brought to you by Fresh Consulting. To find out more about how we pair design and technology together to shape the future, visit us at freshconsulting.com. Make sure to search for The Future Of in Apple Podcasts, Spotify, Google Podcasts, or anywhere else podcasts are found. Make sure to click subscribe so you don’t miss any of our future episodes. And on behalf of our team here at Fresh, thank you for listening.