Podcast

The Future of Spatial Computing

How we interact with technology has changed significantly over the last several years. Immersive technology has flourished since the COVID-19 pandemic as more workplace sectors opt for safer working, learning, and development practices.

It is possible to improve how computers, people, things, and their environments interact with one another by using spatial computing. Spatial computing offers applications that go beyond retail and entertainment and aim to give the virtual world a more humane feel. With the aid of this technology, the digital 3D depiction of the virtual world can be created without the inconvenience of bulky equipment.

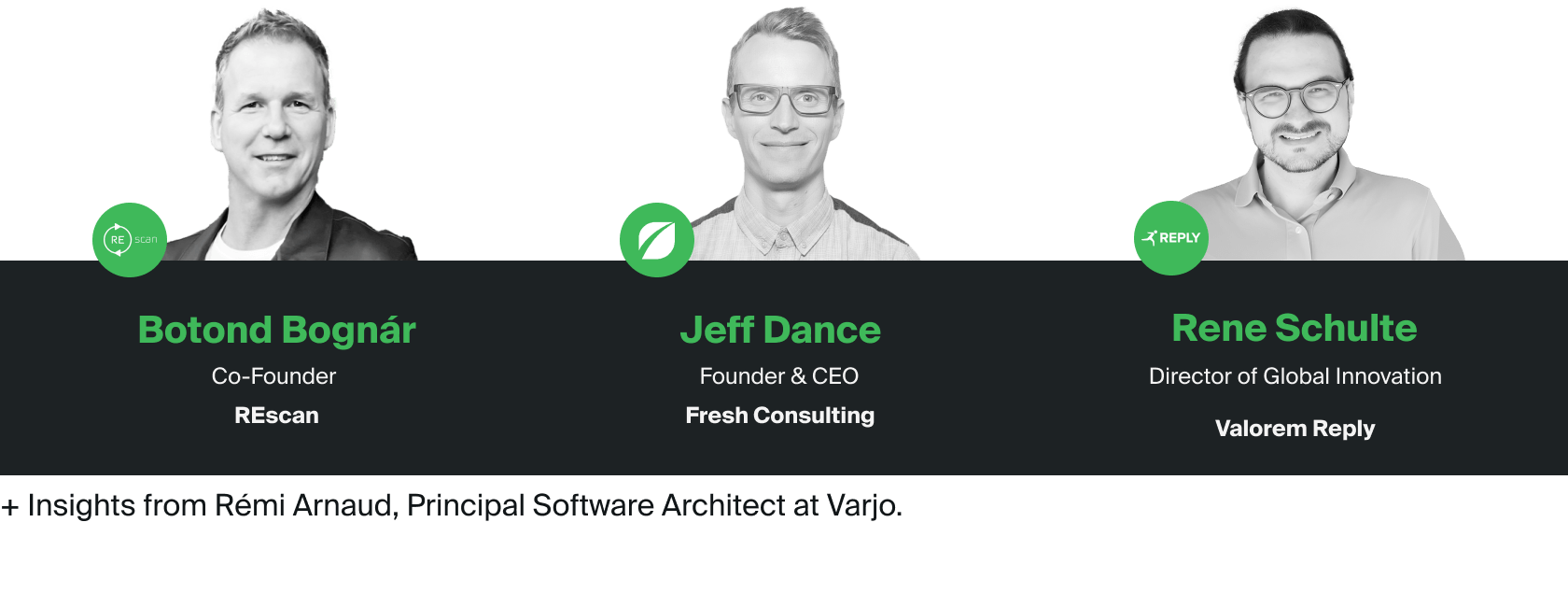

To understand the future of spatial computing in this episode of “The Future Of,” Jeff is joined by René Schulte, Lead of the Spatial Computing Community of Practice at Reply, and Botond Bognar, Co-founder at REscan, with additional insights from Remi Arnaud, Principal Software Architect at Varjo.

Botond Bognar: I see a great chance that technology will be more humane. Let’s say, I don’t need to have a screen just to interact with something or someone. I don’t need to carry a heavy device. I don’t need to have a desktop computer to do something, which I can only do on a desktop computer. I would say that the promise of spatial computing is to, at last, make the digital world and everything around it more humane, human-centric, and compatible with us.

Jeff Dance: Welcome to The Future Of, a podcast by Fresh Consulting, where we discuss and learn about the future of different industries, markets, and technology verticals. Together we’ll chat with leaders and experts in the field and discuss how we can shape the future human experience. I’m your host, Jeff Dance.

***

Jeff: In this episode of The Future Of, we’re joined by René Schulte and Botond Bognar to explore the future of spatial computing. Welcome. It’s a pleasure to have you with me on this episode, focused on this most fascinating topic.

René Schulte: Thank you for having us.

Botond: Yes, thanks for having us.

Jeff: Thanks, René. Thanks, Botond. Really excited to have two future-thinking, I would say, researchers, scientists, evangelists that have been really deep in this space. If we could start with some backgrounds, René, if we can start with you and then Botond, can you tell the listeners more about yourself?

René: Absolutely. My name is René. I’m Director of Global Innovation at Valorem Reply where I’m leading R&D for emerging technologies, like, of course, spatial computing, but also quantum computing, AI, with a focus on computer vision, and a couple of other things. We also have been one of the very few companies that have been working with the Microsoft HoloLens since 2015, before it was even public. We developed some really innovative, mixed reality solutions since then.

Additionally, I’m also leading The Spatial Computing Community of Practice for the whole Reply group, where we have a group of people that all work together on innovative stuff with regard to virtual reality, mixed reality, augmented reality, but also things like point cloud processing, LiDAR scanning, everything that is spatial computing related. We do a lot of innovative stuff in that space. I also have my own video podcast, two of them actually now. The first one is QuBites, which is bite-sized pieces of quantum computing.

In short episodes, like 20 minutes or so, we break down these complex topics of quantum computing and want to make those approachable. For each episode, I invite different expert guests and typically I ask three questions about a certain topic, and then we have a conversation about it. At the same time, we just launched a new show for the metaverse called Meta Minutes. There, we talk about the metaverse. Some of the episodes are not just video recordings, but in fact, we record them inside of metaverse platforms like Meta’s workrooms, or a few others as well.

Once we’re done with this podcast, you’re listening to The Future Of, you might want to check out my podcast as well about Meta Minutes, for example.

Botond: Hi, my name is Botond. Most recently, I co-founded two startups in the space, but before that, in my previous life, I was working with architects to create spaces, be it large or very small. Hence the, I would say, affection for spaces themselves. When it comes to digital, there are these two startups: REscan, which is about digitizing indoor and pedestrian spaces from a human perspective, and Pillantas, which is creating a user interface using the eyes alone. While the former company works with Stanford Research Institute, the latter, Pillantas, works with NASA researchers.

Jeff: It’s impressive. Thank you. René, I also noticed that you’ve been invited more than a dozen times to speak on a topic at different conferences, even on TV. It’s great to have you as an expert here. Also to see that you were honored at Microsoft for many awards in this space, mixed reality, quantum computing, it’s impressive looking at both of your backgrounds. Botond, working with Stanford, that’s a notable name, working with NASA. Obviously, these spaces are on the frontier, and I’m impressed looking through your background. Thanks again for being with us.

If we can start with some of the basics, I just want to start with today, help the listeners understand this space since it’s a little bit abstract, and it involves lots of things coming together. Then let’s talk about the future since that’s so exciting as we think about the impact spatial computing can have. If we can start with Botond, what is spatial computing more or less? How does it work? I know it’s kind of an abstract term.

Botond: Actually, this is a very new term, it’s recently been coined. I’m going to pull out a quote by the MIT student who coined it in 2003, Simon Greenwold, and he says, “Human interaction with a machine in which the machine retains and manipulates referents to real objects and spaces.” More plainly put, I would say anything non-human and a machine interacting with spaces as we humans would interact, that is to navigate and understand the context. That would be spatial computing. It, of course, entails a lot of hardware, a lot of software. I’m most excited about the content itself.

Jeff: René, for you, how do you see the space of spatial computing?

René: What Botond just said is perfectly right, but sometimes in my talks, I try to describe it with the past a little bit. Let me expand that for a moment. Basically, a lot of us got personal computers and personal computing became a thing in the 1990s or so. A lot of people had their first computers, and it became really exciting once we became connected to each other through the internet. We could do knowledge sharing and all of this stuff. Then, mobile computing came along after personal computing, especially when smartphones came out, and in particular, a key moment was, of course, when the first iPhone came out in 2007, with multi-touch input.

Now we have computers in our pockets that are more powerful than the personal computers we had. We come from personal computing over mobile computing now into the so-called spatial computing age, where we can provide even more context than mobile computing provides us. We have mobile computing devices that have GPS sensors, for example. They can provide us some contextual information while we are on the move.

Now, think about, with spatial computing, we’re getting intelligent edge devices. Devices that are mainly driven by AI, and a lot of that is driven by computer vision, that can analyze our surroundings in much more detail, and so they can provide us even more contextual information. This is the key about spatial computing, like, from a technology perspective, and it could be done with different device categories. You might say it could be mixed reality with head-mounted devices, or it could be augmented reality simply on your mobile phone and things like this. All of these devices have, of course, different capabilities, but they have one thing in common today.

With ARKit and ARCore on mobile phone platforms, they can sense the world around us to a certain degree. Of course, if you have a device like a Magic Leap or HoloLens with even more sophisticated sensors, they can sense it to a higher degree, but this also includes LiDAR sensors like laser scanning, all of this, basically taking our surroundings and providing contextual information to the user, with a much higher precision and much more meaningful information.

Jeff: As we think about this being really a confluence, what are some analogies or maybe a lot of people have seen movies, what are some analogous movies, like scenes or things that you guys can think about that can help people connect with what we’re talking about? Maybe it could be a couple of examples.

Botond: Actually, a movie would be a hard one because Ready Player One is very much a VR-oriented direction, but I would argue that, let’s say, in fact, a book comes to my mind written by a Finnish sci-fi writer called Hannu Rajaniemi, The Quantum Thief, where there is a planet, Mars, and there, a lot of entities are existing. Then you would have to put on, if you’re a visitor, a device onto your head so that you can see them. The whole point is that these entities are totally spatially aware. They are just like you and me, they even have the intellectual capability, so it’s another world within the world. I would say it’s another layer to our existing world where the entities appearing in it are very real, not only for the user but for their effects on the user.

Of course, you can talk about robots, so they don’t have to be holograms or anything. You would need to have a device for a human. The human or even a humanoid robot would be spatial computing at its very best because it has to navigate between doors. It has to go through a door, and it has to understand not only the spatial characteristics but the spatial content and the context as well.

René: Maybe Minority Report, to some degree. If you know that movie, it has some of those references, but also, think about actually any movie or sci-fi where you see a lot of contextual information popping up. For example, people walk down the street, you have contextual digital information that shows them relevant information. Not just billboards that are bombarding them. We have seen a lot of dystopian things in that space. There were a lot of interesting sci-fi experiments, I forgot the name, but you have probably seen it, where you are bombarded with ads, all virtual stuff all the time, or you go shopping and then you get bombarded with virtual stuff.

No one wants this, to be clear, but think just about, you have more contextual information, digital information that can provide you more meaningful things in the real world, and it has persistence. This is also an important part of it: if you’re thinking about augmented reality, the objects you see augmented on top of the real world, they will stay there where they are. They’re not going to move when you move your device. They will stay where they are, and this is the most important part about it. Persistence and very good precision so that we can enable these experiences.

Jeff: I think, Botond, you’re actually researching the interaction with the eyes, right, for new experiences? What are all the ways that we can interact with the future of spatial computing?

Botond: There are two ways to look at it. Is the content, or I would say the device or the computer, physical or digital? If it’s physical, then you can go all the way to the humanoid robot, where it has to be just like a human. Then actually, a reference movie would fit very much here: the Terminator movies or anything like that, because they are spatial computing species, if you will. Or autonomous cars. Those have to be very human compatible so that they understand your hand gestures, your body movement, everything.

If it’s digital and if it’s a human using it, I would argue, and hence my direction of UI research, it has to have at least one component which is fully hands-free so that you can interact with the content, optimally just with your brain waves. Having said that, we are not there yet. The future will probably be that. I would believe that the eyes are a very good in-between modus operandi to interact with, but there are many other ways. Then I would pass it to René because I know that he also has a couple of great ideas.

René: Eye tracking and eye interaction is truly a very important research field. We see it already in certain devices. If you look at the Quest 2, they don’t have eye tracking in Quest 2, but they might be getting it at some point, or if you look at the HoloLens 2, they have eye tracking basically in there, which is very important. I can just roll my eyes and look at things. Super important input mechanism, but also your hands are important too. Like hand tracking, you have that, for example, with the Quest 2 in a very good way, on a consumer device, which was just science fiction like five years ago, or you had it in a device like a HoloLens, which costs 10 times more, you know what I mean?

We’re getting to a point where these devices get cheaper while still having these so-called natural user interfaces like hands, eyes. Your voice is also very important, especially when we’re talking about AI and conversational AI models, where you can have a real conversation. Not like some of our voice assistants we have today that say, “Oh, they’re super intelligent,” but actually they’re not that intelligent. I’m talking about real modern transformer models, like really good AI voice assistants. This is an important part. Also, not just for the input, but we also should think about how you can feel more immersion in these solutions.

Then if we’re talking about virtual reality, another interesting part about it is, for example, haptic feedback. That you can feel virtual objects, you can touch virtual objects, which is impossible, of course, but there are devices. You can put on gloves, but there’s even more interesting devices that use ultrasound waves to basically emit something in front of you.

You can feel a sphere or whatever object you have in front of you, you can feel it. I also have been talking with companies that produce virtual smell, scent. There’s a cartridge you can attach to your mirror device, and then you can smell virtual objects.

For example, I have a cup of coffee. I’m holding up my hand. I don’t smell it. If I’m holding it below my nose, I smell coffee, virtual reality coffee, if you will. If I hold it back, I don’t smell it. We have a lot of senses as humans, and the more we can support these senses, the more immersive the solution will be. I think a lot of stuff will be happening in the next couple of years in that regard.

***

Jeff: In addition to the conversation we had with our guests on today’s episode, we asked another expert to provide their insights on the future.

***

Remi Arnaud: Hello, my name is Remi Arnaud. I’m French but have been living in California since the late ’90s. I’ve been working in the field of real-time 3D for my entire career, starting with the work I did for my PhD in Paris, and studying parallel algorithms in the context of replacing dedicated hardware with imaginary processing. I’m currently working as a principal software architect at Varjo. Varjo is a Finnish startup that has been building the world’s most advanced virtual and mixed reality headset available today.

How can spatial computing make interaction more natural?

Obviously, the devices and technology you currently have to experience spatial computing are not yet convenient for mass adoption. The head-mounted displays are either bulky and heavy, or we have not enough performance to represent reality in a meaningful way. Mainly now, the smaller or lighter the devices are, the less performance we have, which limits their usefulness. On the other hand, devices like the Varjo XR-3 are very powerful. They can be used for the most advanced visual computing today, but they require a very powerful and expensive PC to run the application and drive the head-mounted display.

There is another technology already widespread today that will soon help to overcome this. It will be built on infinite computing available in what we call the Varjo Reality Cloud. Varjo has a service that essentially offers both perfect visual fidelity and high performance for mobile headsets, as well as phones, laptops, tablets, or any other computing devices. Using Reality Compute, anybody can stream a human-eye resolution, wide field of view image in single megabytes per second to any device.

Everyone will be able to use Varjo Reality Cloud through 5G technology and run existing and future applications in premium quality. Varjo Reality Cloud will be the bridge between the real and the virtual. It will allow you to recreate your surroundings and share them, transcending human communication beyond the physical boundaries. Headsets can be light and untethered, and still powered by infinite computing power, making the interaction more natural.

***

Jeff: One of the projects that we’re working on at Fresh was using hand gestures for navigation, using your voice for navigation, using your face for authentication. Thinking about the confluence, we call this project Telemus. It’s on our website right now. The notion was if you’re on the go with your mobile phone, that could be efficient. If you’re at work on a computer, that might be efficient, but there are all these in-between states, where you might still have an interaction with information, maybe in the car, maybe on an elevator, maybe as you’re out in nature, as you’re planning with a team where it doesn’t make sense to be so heavy on your computer.

Having these additional ways to pull information and see information becomes really exciting. This is something we were working on a few years ago, but the space has evolved so much in the last few years. I was curious to ask you two before we talk deeper about the future and what could be, how is spatial computing used today?

René: Especially in the industry, like, look at manufacturing and a couple of other industries, we deployed applications for these. I cannot talk too much because of NDA reasons, but there’s a lot of interesting things you can do there. One example is as simple as remote assistance these days. You have an expert, a domain expert that is not on-site. For example, you have a complex machine that needs to be repaired, they get on a phone call typically. Now if you have devices like a HoloLens, you put this on, and you have a front-facing camera and can stream that directly from the first person view, just to the domain expert that could be anywhere in the world.

This is providing a lot of return on investment for clients, especially these days when travel has been reduced in the last couple of years with COVID, and so on. These have gained a lot of momentum, in fact, but there’s also, of course, other industries. We see a lot of training applications, a lot of cases where you can, in fact, provide contextual information to the user. For example, you can train them on the actual machine or on the actual object. You can augment it with virtual items that show you the training steps, for example.

Not just 2D, like on the screen, but actually three-dimensional, as 3D objects that can blend in and can show you some much more immersive training solutions that people also remember much more. For VR trainings, for example, we’re also enhancing that with haptic feedback, like I mentioned initially. Like full body suit haptic feedback, but also for your hands. For example, if you’re doing training, and you have to navigate in a virtual room, you’re going to make sure that you don’t bounce into objects. If you’re wearing a haptic bodysuit, you will actually feel it on your arm, for example, if you run into something.

Increasing immersion is very, very useful for these kinds of use cases. I could talk about many more use cases, but definitely, and especially in the professional industry where you see a return on investment. Another maybe last quick example is, since we talked about NASA already a little bit, for the Orion space capsule, the manufacturing, I think it’s done by Lockheed Martin also. Anyhow, there is a case where they’re using the HoloLens for quality control, and they have to check all the bolts on the space capsule, if they’re manufactured correctly.

What they do is, now with the HoloLens, they put this on, and they see an overlay right in front of them. They save, I think, 80% of the time. This is ridiculous, they reduce a full shift to 45 minutes in a test. This can scale quite a bit in some industries. There’s a lot of return on investment already. Of course, in the consumer market we’ll see some things like certain consumer applications that people are using, but I think the real return on investment right now is definitely in the enterprise world.

Botond: Yes. In a broad sense, let’s say for the consumer, there are a couple of glasses already which have cameras in them. Of course, there was Snap with two or three iterations. Then most recently, I think, Meta released glasses you can go out with, and that was with Ray-Ban, and of course, there was Bose. Those are spatial computing devices because they understand, not precisely where they are, but, let’s say, where they are in the world, for instance. Then, by the way, I think 1 billion users just started to use or are using AR on their phones, which is, I would say, a pretty neat number, although these are typically nice-to-have or fun experiences. They are not necessarily must-haves just yet.

I think enterprise right now, as René pointed out, is one of the great users where money is involved. There is a, I would say, straight-to-the-point optimization or something very, very useful that hasn’t been done before, like quality control, or I would say training will be huge. There will be some of those points where you can train an entire new fleet of workers without being in the space just now. Then when they show up on Day 1, they know where they are, what to do all of a sudden, or they go to a big oil refinery or a rig or something.

Enterprise is clearly the very first one of using it. Having said that, this is minuscule. This is, I would say, a grain on the beach. It’s just unimaginably small compared to what it is actually. We are not even in the early, early––it’s like before the early, what it is about to become.

Jeff: Often with great technology innovation, it comes on the heels of problems, serious problems. Botond, I noticed that you have a special emphasis in some of your research on sociotechnical challenges. I’m curious, what do you guys see as some of the big problems or challenges that this will resolve?

René: For the societal aspects, if we’re thinking about head-mounted devices like AR glasses, we might all remember the “glasshole” kind of thing. This is important. If you walk down the streets and with all the privacy issues we have today, we need to ensure that people feel comfortable actually wearing those. Maybe it is also the form factor that is definitely needed to get closer to a smaller form factor like my glasses I’m wearing. If you’re just listening to the podcast, you cannot see, but I’m wearing normal glasses.

Getting to this form factor where it’s less intrusive, and less kind of, “Hey, there’s a big camera recording me all the time,” it’s especially important for certain markets here in Europe, for example, like in Germany, in particular, no one would accept this here, also in a work setting, and so this is a very important aspect to solve.

Botond: I would say spatial computing is a new chance to do things right. What I mean by right is making it more inclusive. With many technologies, and when new ages or new eras are coming, typically, the technologists, or companies, or whoever is, I would say, the wind pushers or wind makers, they say, “This is how it is.” Then there you have it. Then all of a sudden, we as users have to bend around technology, or bend around engineering, or have to adapt to it. I would say that when spatial computing comes, and this is part of my research, in fact, that’s why I’m so excited about it, we have a chance to do something different.

How can we bake into it privacy by design, or inclusion by design, and security and safety by design? I would argue that we are at a very early age, and I would say this is a high level. One of the problems is that many of our technologies are not inclusive, so I think this is a number one chance that we be more inclusive. Second, to be more specific, I would say people could finally enjoy content, who, let’s say, don’t have even hands, or people who actually don’t have certain capabilities, and they can also now have some extra or more, or even we can augment the very user, and then have an extra capability.

Then we haven’t touched yet, why is it so exciting? I believe that it’s exciting because it augments the human itself and the human intellect. I would hate to say it if it’s just another gadget where we are consuming more content instead of interacting with it and interacting with each other. When it comes to problems, I would say that, so one is inclusion, and I would say that’s a pretty big one already. Number two is to be able to actually connect with each other because right now, what we are seeing is that we are starting to separate from each other, we are within echo chambers.

I hope that spatial computing, because the content is spatial, would be a chance to connect together with other fellow citizens and people around the world. I would say that these are two, I wish it will connect, and of course, the overarching theme is human augmentation so that we can be more one with technology.

Jeff: How do you see spatial computing differ from the metaverse? Is it analogous? I think that the notion of the 3D mapping of objects and sensors, obviously that could go hand-in-hand with the metaverse but is spatial computing a broader term than the metaverse? How do you see those two being related?

Botond: Yes. The metaverse has been greatly confused by a mega company because the metaverse originally meant something very, very different from what Zuckerberg means by metaverse, so the very new stuff in the metaverse is very different from what Facebook says. Then I believe that nobody even wants to have any of those two, for real. I would circle back: spatial computing is a very technical term and a technical expression. I like Tony Parisi’s definition, where it is just going to be the continuation of the internet, but this time spatial in a way that is really open, it’s really connecting us.

Then whatever happens in the space in a digital manner has very real effects on us, hence has to be very well taken care of. Hence, it’s immersive, hence, it’s joyful and augmenting the human. In other words, we are connecting the digital reality with the physical reality at once in real-time, so that we can enjoy space with digital content without having trouble, such as a heavy device, or whatnot. If that is metaverse, or game, whatever it was, of course, there is a dystopian metaverse in the book. You don’t want to have that.

There’s a marketing corporate metaverse by one company. I don’t want that either. I’m really curious what the term will be because I would bet that it’s not going to be metaverse. It’s something else, and there’s a great discussion. I would greatly advise you to follow the XR Guild in Silicon Valley. Of course, it’s a very open one. I would say that this is very early to say what it compares to. I would like to say this spatial computing is a technical description of how it functions and what it does. Then, how it will be, I don’t know yet. I don’t think anyone does.

***

Remi: I would go as far as saying that spatial computing is possibly one of the best tools we have to improve our relationship with Mother Earth and fellow inhabitants. I think the more this technology is deployed to people, the better chances we have to change our behavior. I understand that some have already given up and want to flee and establish new colonies on other planets, but I think with the right tools, we can do a much better job understanding the impact of our actions and make the right decisions. The first important step in fixing an issue is recognizing that the issue exists. If it’s not visible, maybe it doesn’t exist.

What better than spatial computing to provide up-to-date information about how things are impacting the environment, positively or negatively? This will provide a way for everyone to observe the issues that otherwise are easily hidden from our eyes. Take, for example, global warming. Buildings and construction together account for a third of carbon dioxide emissions. I would love to be able to see, while I’m visiting a facility or building, which parts of which buildings are the least energy efficient, for example.

That would definitely impact my behavior and the places I would spend time and money in. Talking about global warming, another immediate impact of spatial computing is the capability to bring places to people rather than having to bring people to places. This means a lot less travel and transportation, since it is possible to digitize places and stream them over to wherever the users are so that we use less energy than having to physically move people. Transportation is responsible for about a third of greenhouse gas emissions and worldwide consumption. Another reason why spatial computing can have a huge impact on the world.

***

Jeff: Let’s shift to the future a little bit. Why are you guys so excited about the space? What do you think some practical applications could be at home or at the office as we think about having more spatial computing? We understand the capabilities, but if we fast forward to the future, why is this so exciting? René, what are your thoughts? Maybe you could pick one, home or the office, and Botond can take the other one.

René: Yes, if I look at the professional workspace and the office, which is what I’m mainly focused on, I think we just are thinking about headsets all the time, and I don’t think this is the end goal. I’d rather see it that we have walls behind us that are basically holographic displays, or volumetric displays. I’m not going to name companies, but there are some companies that are working on these kinds of large displays.

Just think about it: you will have a meeting room in the future where the walls are volumetric three-dimensional displays, where you don’t need to put on any glasses, but you can talk with your colleagues that are somewhere worldwide, and you really feel like they’re sitting there. This comes straight out of certain science fiction movies, but this is happening. We already have these smaller screen sizes, these 3D volumetric displays happening at 20 inches or so, and I think now even larger, but the goal is really to build wall-sized, 3D volumetric displays, and then we will have really immersive meetings without having to put on any silly glasses.

Botond: Let’s assume that everything works out, meaning there is a great device, it’s very comfortable to wear or the interaction is nailed. The robots are really interacting with us as they should, and there is infrastructure, meaning that they can communicate with data, no latency or low latency, and so on and so forth. Let’s assume everything plays out and they have the same chance as us to understand spaces. I would say that, in this case, digital entities will be like us. Very difficult to distinguish. I would believe that we will have avatars, which are fully self-aware and interacting with spaces.

I would believe that not only that but we will be hopefully enabled to interact with content, and especially information and data, as never before. Meaning that we can interact with 1 million search answers and try to find what we really would like to pursue because today, because of the screen size, you literally get five top answers that the algorithm thinks you should be consuming. When it comes to spatial computing, now we can put content into 3D spaces. I would argue that whatever is digitally available today, I hope that in 20 years’ time it will be a part of our physical life in a way which is humane.

I would like to give you a very concrete example. If you think of the most concentrated spaces of information, that is a library. Yet, in a library, because it’s been through the iteration and evolution of how to interact with content, data, and information, you feel very calm. There’s a system, there was an assistant, of course, and so on and so forth. When you wanted before to go to information, then you went to a library, and then you knew that this you will find. In fact, you felt good about it. You even went to study there, so today, because of this silly little interface, it’s just not possible.

I call this the keyhole to the library where the librarian is holding the book very, very fast and then brings you the content. I think it will just unleash everything which is online, and it will materialize and be embodied. When that happens, we will be finally able to interact, and exchange, and retrieve, and have, and own this information and experience properly. At last, we will be one with the digital content, but that could have dystopian parts as well so we have to be researching and working on it.

Jeff: What about healthcare and the medical space? This notion of saving lives or doing things better, always that there’s an impetus where it’s like when technology can aid in that, then it can also be sped up. Do you have any thoughts on healthcare? I know we talked a little bit about entertainment, manufacturing, but what about that space?

René: Yes, healthcare definitely, we’re already seeing some interesting stuff happening in the healthcare space. For example, there were even the first surgeries already being done while using spatial computing devices. The benefit that the surgeons see there is already existing where basically, for example, they do some certain, let’s say a spine surgery or whatever it is, where they need to have an MRI or CT scan right next to them that they need to take a look at. Instead of looking on a 2D monitor, they can now have it with these 3D stereo glasses projected right in front of them.

It doesn’t have to be on the patient. Some solutions actually project, let’s say, the CT scan from before right onto the patient. With some extra tracking devices, you can get closer to the precision the surgeons need, which is millimeter range or sub-millimeter range, in fact, and this is still a challenge of course. Even without overlaying directly on the patient, you can just overlay it on the side. Again, you’re basically wearing semi-transparent display glasses where you can still see the real world, but also these virtual objects, and you can have an infinite screen in front of you with all this information.

Another aspect in healthcare is, of course, also telemedicine, that, as you probably know, certain rural areas have a problem that there is not enough health personnel that basically lives there, and so they have a shortage for this. What we also see are solutions emerging for telemedicine and not just on the phone or on a 2D video call, but much more immersively.

Jeff: Where do you see spatial computing impacting education in the future?

Botond: A good answer, or the honest answer, is that I don’t know because, right now, education is still in the Victorian setting. The teacher is here, and the people are laid out there, and then all the solutions I’m seeing currently are trying to do the same thing, but digitally. This is when a realm is changing. I think we will have to reinvent education in many ways. In fact, some of the decisions will be what needs to be taught in person and what is okay to be taught remotely or digitally. Then there is this fine line or borderline, and then there is, you mentioned, scaling. One of the biggest deals about spatial computing is that finally you can scale communication. You can scale interaction, and then telemedicine is one of the examples.

I think if we talk about 20 years out, the real question will be, as already today, “AI teachers” or “AI-based systems.” In spatial computing, you pop on glasses, either VR or MR glasses, or whatnot. I would believe that if you’re home, the teacher could come and sit down in front of you, and you would perceive the teacher as if there were a person. That’s where it becomes a tricky question because, all of a sudden, there will be a person, or an algorithm, or whoever controlling that thing.

When it comes to education, I think education itself is going to be a hand-in-hand experiment. I don’t know where it is going. All I know is that the teachers themselves will be more and more either augmented, or helped, or replaced. Of course, these are very, very, I would say the very drastic type of thing, but technically speaking, it will be possible.

René: I just want to give one example, which I think, for me, personally, it would be really amazing. I don’t know if any one of you, probably you all had advanced math. Like linear algebra, all of this stuff. It was really dry and boring when I learned it, but it really clicked for me when I first started to do 3D computer graphics because I could use vector algebra. I could do some fancy 3D things or 2D in the beginning.

I think this is a big chance for education to motivate students more and you can give them some devices. Of course, not all day. We don’t want to sit in a dystopia with a VR headset in the classroom. This is, of course, not the vision, but for certain things, especially when, for example, they talk about math or physics, just think about it, you can visualize invisible things like a magnetic field in three dimensions, right in front of you. I’ve seen a gentleman from Japan, a physics teacher, who built a HoloLens app where he was augmenting, let’s say, a rod of metal, or iron, or something. There was a magnet, actually. He had a real magnet, and he was augmenting that. You could see the magnetic field around it, and you could also bring in other devices, and it would dynamically change.

What I’m trying to say is we live in three dimensions, and we teach in two dimensions mostly, but we have the opportunity with this kind of stuff to bring the third dimension into education and to make it much more realistic, and much more feasible, and also more interesting for students. We need students, especially in math and other categories, and STEM, as you all know, it’s a big issue all over the world, and maybe it’s part of the motivation. Let’s get folks better motivated, and they will learn for themselves because they see the benefit of it.

Jeff: It seems like spatial computing is the hybrid of being able to be disconnected and connected at the same time. I’m curious if you guys have more thoughts on that, about how the future of spatial computing helps us disconnect more from technology or just have more of our natural environment. Any other thoughts about that?

Botond: The way I see it, there is a trajectory and a trend between the human species and information at large. That before we were willing to take the ride and the drive to the information centers, be it, back then, the market, where you would get the fresh news, or, of course, once a week, to the church, and then the library, and all that. Later, you went to the newsstand to get the information, but then came the radio so then you had a radio at home now, the television. All of a sudden, the interface to information, which was prior, let’s say books or another person when there were no books, now it’s a mobile device.

Now as we’re talking about throughout spatial computing, that it’s actually migrating closer and closer to your head. I’m afraid to say it, but I’m just seeing it, that we humans are keen to be closer and closer to information, hence, disconnecting will be a bigger and bigger problem, or let’s be positive, bigger and bigger challenge.

On that note, I would say that when I can take off my glasses or whatever device, and I can disconnect and it is just that easy, throw away your phone for a few hours, and it’s just that easy. It’s not even on your body, or you will have rings, or whatever on your body, or even your clothes could be spatially aware.

I’m saying, does spatial computing as a phenomenon help us to disconnect from technology? I would say no. Will it help us to get rid of devices and then reduce the machine stuff? I would say probably because just like the very mobile phone––now you don’t have a flashlight and so on, you have so many things you don’t have because your phone does it. Is it a good thing? I don’t know, but, right now there are companies drilling your skull and then wanting to have the thing in your head already. The real question is what do we humans want to do with this possibility to connect and interact with information?

What I’m seeing and observing, we’re just so keen to connect to it and be with it. Maybe one day, we’ll be just one, and then the Fermi Paradox is real. People just said, “Okay, let’s just be in a box somewhere in space.” Then, I don’t know. Short answer, I don’t think spatial computing is helping us to disconnect from technology or the digital world at large.

I see a great chance that technology will be more humane. Let’s say I don’t need to have a screen just to interact with something or someone. I don’t need to carry a heavy device. I don’t need to have a desktop computer to do something, which I can only do on the desktop computer.

I will say that the promise of this spatial computing is to, at last, make the digital world and everything around it more humane, human-centric, and then compatible with us.

***

Remi: 20 years is a long time considering how fast, and exponentially faster, technology is evolving. Things like quantum computing applications, maybe some sentient AI, and brain-computer interfaces are all going to have a huge impact on everything, including spatial computing. I won’t venture so far with my crystal ball. I would stay within the next decade. I’m not willing to wait 20 years to experience a good spatial computing application.

As far as spatial computing being used at home or the office, the answer is oh yes, it will be used at both places, just like the mobile phone is commonly used in both places. Maybe introduced in one and brought to the office, or the other way around, but it will be used everywhere.

On the technology side, I would like to talk about the difference between see-through and pass-through technology. See-through technology uses lenses that allow direct observation of the real world through the lenses while inserting additional virtual objects in the user’s field of view. Video pass-through, on the other hand, digitizes, in real time, the world around you, before merging it with virtual objects and presenting the user with a purely synthetic image.

Going back to the example where virtual objects are added to the real world for testing purposes, we can observe that this scenario can be implemented using either see-through or pass-through. However, I want to point out the big advantage that pass-through has. With see-through, it is possible to add on top of reality, but not alter reality.

For example, in order to study the impact of adding a new object in a room, it is important to visualize the impact on the overall lighting. The added object will add its shadow to the real world. It will add its reflection to the world’s mirrors and shiny surfaces, potentially outlining into the real world.

As you can see, it’s not possible to correctly integrate your object into the real world without altering the entire image. Another example is the capability of video pass-through, especially when using AI, to filter out reality. There are already applications where all the furniture can be removed from a room without physically moving anything. This allows the user to test out new furnishings without the inconvenience of having furniture already in the room, and see-through technology can’t do that.

In short, pass-through provides the user with superpowers, so I would predict that pass-through will be the dominant technology in spatial computing in the future. That said, it will take some time to figure out how to build wearable pass-through devices, so I expect to see more see-through devices in the near future, but in the longer term, I would think they will be replaced by pass-through devices.

Other than pure technology conversation, the main improvement I would expect during the next decade is the inclusion of people interaction into spatial computing.

Collaboration and sharing spaces are essential for any kind of technology to reach its full usefulness. This will enable bringing several people to the same physical location at the same time, with real-time interaction with the real world and real people. This can be referred to as virtual teleportation, where several people can be teleported into a real location.

For example, this can be used to bring an expert to observe and help with an issue happening in the real world, or just to bring many people together to experience an event such as a concert. I would think that virtual teleportation has the potential to be the killer app of pass-through mixed reality and spatial computing.

***

Jeff: What about some of the ethical dilemmas? You guys mentioned security, privacy. What are some of those things and designing everything with intent now, while it’s in the formation stage, what are some of those dilemmas that we need to be mindful of, and put a lot of energy into?

René: Yes, privacy first. This needs to be one of the first things when designing solutions. We need to think about it, especially when we’re thinking about spatial computing and basically, it’s driven by computer vision, which means analyzing camera frames. You want to make sure that when you do this, like when you use your phone or whatever device you have to scan an environment around you, that this data is, first of all, securely stored, if at all the storing of the data is needed, and that no one can do malicious things with it.

If I look at certain large tech players where the main business model is advertisements, and where the revenue comes from, I don’t know if I would trust them.

Trust will become a really, really important thing, even more than it is today, where it’s already very important. One example is Microsoft’s Azure Spatial Anchors technology, one implementation where you can put virtual anchors in the real world. I know the team pretty well, the research team. They’re based out of Zurich, and they put a huge emphasis on privacy, if you look at some of the papers from computer vision conferences. What they do is when you scan the environment around you, they process the camera frames locally on the device, and they don’t send the camera frames into the cloud and store them there, they only extract the relevant features, so-called feature points.

They’re not even showing the feature points as they are, they’re storing them in a kind of obfuscated way, so that it’s pretty much impossible to reverse engineer from the data that is stored in order to, later on, localize, to get to the real-world situation.

These things need to be key because otherwise, this will just fail because we need to make sure this is a secure space where privacy is also maintained. I’m not even getting into the whole aspect of avatars. This is, of course, a different part. I’m just talking about the real spatial computing aspect of this, but yes, this is huge.

This is a very important part of the topic that we also probably need some policies and constraints that will ensure that folks don’t get held up with this.

Jeff: Do you know of any groups that are working on those policies right now?

René: Yes. For example, you have the Metaverse Foundation, Europe. This is one that was just founded. They’re looking into how to make sure that these metaverse solutions will maintain certain values that are common to Europe, for example, privacy, security, and whatnot. Of course, there are other standards forums that are evolving, like the Metaverse Standards Forum, mainly driven by The Khronos Group and a couple of others.

Yes, fortunately, a lot of folks are thinking about it.

Botond: Actually, just to add two more, I would say associations or formations are the OpenXR and the XR Guild. I would just say that there are more and more and more groups popping up saying, “Hey, values first. What are the philosophies, and what are the things we want to achieve with this?” Then, let’s try to make norms, rules, and so on, and so forth, and policies to get it right or at least give a chance to it because nobody really knows how it is going to evolve, and how the consumer wants to use it at last.

Maybe there’s one very specific example, which I would be more worried about because today, there is an understanding, when do you give a mobile phone to your child? That means, when do you put into his or her hand the digital connection to everything else? Of course, you say, “Okay, I’m going to limit it,” so up to a certain age, whatever that age is, he or she won’t get that kind of access. When you’re going to put glasses, VR headsets on a child, there, fairy tales are a thing. Then, of course, right now we are laughing, “Oh, there is a fairy,” and they believe there is a fairy. Now, this time around, give it 20 years, that fairy will be not only indistinguishable from a bird, but it will be more intelligent than any human because it will have the AI capability, it will understand, it will empathize, it can manipulate.

All of a sudden, that kind of digitality, or digital reality becomes reality, and let’s say, us, we are grownups, but trust me, when you’re driving and a child walks in front of you, your basic instinct will be just.

Hacking a person will be even more devastating, but let’s say that you already have a security system, but when do you give your glasses to your child so that kind of fairy tales in the books and television becomes reality? I would argue that some child will say, “I don’t want to have the real dog. I just want to have a digital dog” for certain reasons.

You don’t have to take it down to walk, for instance, and it knows a lot more. Then it becomes basically your memory of avatar or whatnot. I would say that these are humongous ethical and even evolutionary questions and challenges because it will tweak the course, what we are going to walk down from then on, because, right now, you can close a book, you can finish a film-

Jeff: Shut a device.

Botond: ––you could just shut the device, or you can shut the experience. Let’s say, theater is also virtualized storytelling, but with glasses or VR headsets or whatnot, and I believe glasses will ultimately be VR headsets also, it’s very hard to take it off and say, “I’m going to just leave it behind.” I argue that the biggest challenge there is when and how we are going to introduce it to our daily life and especially our children.

Jeff: Putting it in the context of kids is a great example for us to be diligent about how we prepare for those issues.

As a final question here, what advice would you give to your kids, if you have kids or maybe it could be your students. What advice would you give to them for this future?

René: I actually have five kids, in fact, yes. I’m very aware of the issues that are arising with all of this. My advice for the kids is, first of all, they have smartphones, the older ones. We lock them down quite a bit, and I use applications to control it a little bit so that they don’t spend too much time, but the advice for them is, don’t be afraid too much, but also be mindful of what you share online because everything you share online, everything you record, everything you post on social media, you never know who’s going to use it and you never know what’s going to happen with it.

That is the case right now. That will always be the case and will just be even more important in the future. You have to be mindful with these devices, what they can do. They can create great content. I always tell them, “Hey, of course, take photos, do videos, whatever, do content creation. Don’t just consume. Don’t just use these applications to consume because these applications are fantastic and these devices are fantastic for content creation. Be creative and use them as a tool instead of just as a content consumption mechanism.”

Botond: To follow on René’s thoughts, creation, and then focus on anything which is around creation of either the content, or thoughts, or just communication. I would say the creative industry has a humongous opportunity right here because this has to be reinvented, formalized, made. Having said that, if it’s a young student, let’s say in his or her 20s, I would say, try to pick and follow those tools and understand those tools and how they work, which make this possible. 30, 40 years ago you would say coding, and then people didn’t pick it up because what is it for?

If you could roll back, and even at a certain point in time, reading and writing was a technology. At this time I would believe the tools which enable the user to create and express his or herself so that they don’t become just pushed onto them and they become consumers, but they can also express themselves so they can extend themselves.

Jeff: That’s great. I love how we’re getting it back to these nuggets, which is where technology can help us or it can hurt us. We talked about these two opposites here. If you think about our purpose, like, in life, it seems like as human beings, this notion to create, this notion to connect with other human beings, the relationship side, the creation side, the knowledge––gathering knowledge and becoming smarter about things, that’s where technology has so much potential.

On the flip side, it can hurt us. If we’re just consuming things, it could hurt our relationships if we’re not getting out and connecting with people also in person. It could hurt our knowledge or our ability to create if we’re just consuming.

I think that if we think about the potential here, it’s the potential for greatness. It’s also the potential for hurt, and that’s why it’s so important to be talking about this now and saying, “How do we participate and make an impact such that we can see more of the benefits versus the cons that could hurt the capability of the future?”

It’s great chatting with you both, gathering your insights, gathering all these nuggets. I think there are some really profound comments, and I really appreciate you being on the show.

Botond: Thank you so much for having us.

René: Yes, thank you so much for having us. It was a great conversation, and I think we could talk for many more hours.

***

Jeff: The Future Of podcast is brought to you by Fresh Consulting. To find out more about how we pair design and technology together to shape the future, visit us at freshconsulting.com. Make sure to search for The Future Of in Apple Podcasts, Spotify, Google Podcasts, or anywhere else podcasts are found. Make sure to click Subscribe so you don’t miss any of our future episodes. On behalf of our team here at Fresh, thank you for listening.